I pay for an always-on connection to the internet at home. But it's not always on.

So I wrote a little sh script to ping a reliable host and log any packet loss.

Sample output looks like this (date, time, packet-loss percentage, then ping's own summary line):

```
2004-11-08 20:33:58 0 10 packets transmitted, 10 packets received, 0% packet loss
2004-11-08 20:34:07 0 10 packets transmitted, 10 packets received, 0% packet loss
2004-11-08 20:34:16 10 10 packets transmitted, 9 packets received, 10% packet loss
2004-11-08 20:34:26 100 10 packets transmitted, 0 packets received, 100% packet loss
2004-11-08 20:34:45 0 10 packets transmitted, 10 packets received, 0% packet loss
2004-11-08 20:34:54 0 10 packets transmitted, 10 packets received, 0% packet loss
```

The sample above is fine-grained: ping 10 times, log the result, repeat. The script below pings the host 100 times before writing each log line, so each entry covers roughly 100 seconds.

Here is the script so you can use it too.

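```sh
#!/bin/sh
# Ping a reliable host 100 times, then append one line to /var/log/pinger:
# date, time, packet-loss percentage, and ping's summary line. Repeat forever.
while true
do
    # Splitting on spaces or '%', field 7 of a summary line such as
    # "100 packets transmitted, 97 packets received, 3% packet loss"
    # is the loss percentage (other ping versions word this line differently).
    echo "$(date '+%F %T') $(ping -c 100 www.washington.edu | grep transmitted | awk -F' |%' '{print $7, $0}')" >> /var/log/pinger
done
```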
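To turn the log into numbers, a short awk pass can summarize it per day. This is a minimal sketch, assuming the log format written by the script above; the function name `pinger_summary` and its output columns are my own choices, not part of the original setup.

```sh
#!/bin/sh
# Summarize a pinger log, one line per day:
#   day  samples  mean-loss-%  samples-with-100%-loss
# Expects the log format written by the pinger script:
#   yyyy-mm-dd hh:mm:ss loss-percent ping-summary...
# Lines with no loss figure (ping printed no summary) are skipped.
pinger_summary() {
    awk '
        NF < 3 { next }
        {
            n[$1]++                        # samples for this day
            sum[$1] += $3                  # total loss for this day
            if ($3 + 0 == 100) out[$1]++   # samples that were complete outages
        }
        END {
            for (d in n)
                printf "%s %d %.1f %d\n", d, n[d], sum[d] / n[d], out[d] + 0
        }
    ' "$1" | sort    # awk visits days in no particular order, so sort them
}

# Example: pinger_summary /var/log/pinger
```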

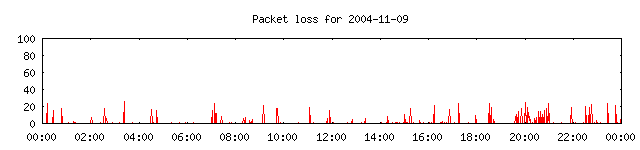

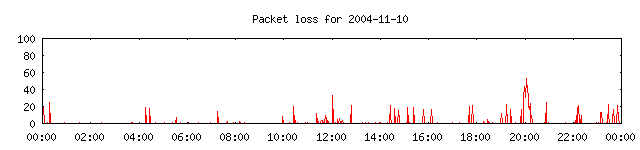

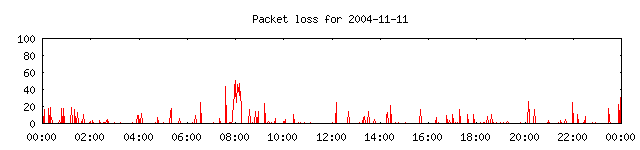

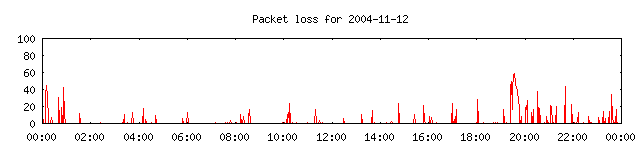

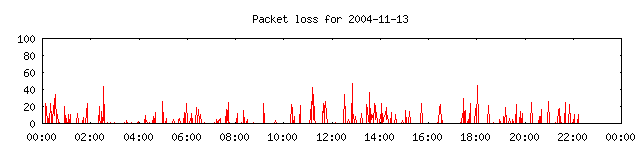

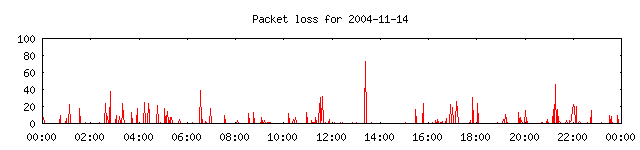

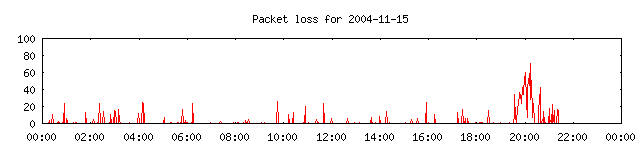

The graphs above show packet loss over the last few days.

Each was made with this gnuplot script:

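```sh
#!/bin/sh
# Usage: scriptname yyyy-mm-dd
# Plots that day's entries from /var/log/pinger into pinger-yyyy-mm-dd.png.
[ -n "$1" ] || { echo "usage: $0 yyyy-mm-dd" >&2; exit 1; }

# The timestamp spans log columns 1 and 2 (date and time),
# so column 3 is the packet-loss percentage.
gnuplot <<EOF
set timefmt '%Y-%m-%d %H:%M:%S'
set xdata time
set xrange ['$1 00:00:00':'$1 23:59:59']
set yrange [0:100]
set format x '%H:%M'
set terminal png small size 640,150
set output 'pinger-$1.png'
unset key
set title 'Packet loss for $1'
plot "/var/log/pinger" using 1:3 with lines
EOF
```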

Run it as `scriptname yyyy-mm-dd` with the date you want to plot; it writes the graph to `pinger-yyyy-mm-dd.png` in the current directory.
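The plotting script handles one day at a time. To regenerate graphs for every day in the log, something like the following should work. It assumes the log format is unchanged and that the plotting script was saved as `pinger-plot.sh`, a hypothetical name; `log_days` is likewise a helper I made up for illustration.

```sh
#!/bin/sh
# Print each distinct day (yyyy-mm-dd) that appears in a pinger log.
# The date is the first space-separated field of every log line.
log_days() {
    cut -d' ' -f1 "$1" | sort -u
}

# Hypothetical usage, assuming the plotting script is ./pinger-plot.sh:
#   for day in $(log_days /var/log/pinger); do
#       ./pinger-plot.sh "$day"
#   done
```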

It has been my subjective experience that whenever I download something, I start losing packets. One possible reason is that outbound capacity (my service is presently limited to 256Kbps outbound) is being saturated beyond what the provider predicted. Most "home users" use their always-on connection in bursts: download a web page, do nothing for 10-15 seconds, download another web page, do nothing for 10-15 seconds, retrieve mail, do nothing for 10-15 seconds, and so on. When every user behaves this way, the true outbound capacity a provider needs for N users can be far less than N*256Kbps. But something like the recent Bagle virus, which turns vulnerable Windows machines into packet-spewing drones, changes many users' usage pattern from bursts to steady streams, usually consuming all the capacity each user is allowed. The provider then needs n*256Kbps (where n ~= N), and until demand drops back to the bandwidth the provider actually has, everyone suffers.
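The oversubscription argument is simple arithmetic. As a rough sketch, average outbound demand is users * per-user cap * the fraction of time each user is actually sending. The user count and duty cycles below are hypothetical numbers chosen for illustration, not figures from my provider, and an average says nothing about short peaks.

```sh
#!/bin/sh
# Average outbound demand in Kbps:
#   users * per-user cap (Kbps) * percentage of time each user is sending / 100
# Integer arithmetic only; all inputs are illustrative.
demand_kbps() {
    users=$1 cap_kbps=$2 duty_pct=$3
    echo $(( users * cap_kbps * duty_pct / 100 ))
}

demand_kbps 100 256 10    # bursty browsing, sending 10% of the time: 2560
demand_kbps 100 256 100   # every machine streaming nonstop: 25600
```

A provider that sized its uplink for the first case would face ten times the demand in the second.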

To test my hypothesis, I set out to measure how much traffic my network device actually moved during a download. The behavior I've noticed is reminiscent of a round-robin bandwidth-limiting algorithm: to limit outgoing bandwidth, all outgoing packets are put in a queue and sent in turn, so the more packets there are, the fewer of mine get out each second.

Unfortunately, my experiment suggests that this queue is not really a FIFO; it's first-lump-in-first-lump-out. That is, I get to send data at my maximum capacity of 256Kbps for, say, X seconds, but am then penalized for the next k*X seconds by not being allowed to send at all. During this k*X-second penalty, other users get their X-second shares. As more users appear, k gets bigger.
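The difference between a packet-by-packet round robin and this first-lump-in-first-lump-out behavior can be shown as a toy schedule. This is a model under stated assumptions (equal users, a fixed slot of X seconds, and k = users - 1), not the provider's actual scheduler; `share_schedule` is a hypothetical name.

```sh
#!/bin/sh
# For each second, print: second, my Kbps under a fair per-packet round
# robin, my Kbps under first-lump-in-first-lump-out.
#   fair: every user gets cap/users every second
#   lump: users take turns; each gets the full cap for `slot` seconds,
#         then waits (users - 1) * slot seconds
# The 256 Kbps cap matches my service; everything else is hypothetical.
share_schedule() {
    users=$1 slot=$2 seconds=$3 cap=256
    t=0
    while [ "$t" -lt "$seconds" ]; do
        # My turn is every slot whose index is a multiple of `users`.
        if [ $(( (t / slot) % users )) -eq 0 ]; then lump=$cap; else lump=0; fi
        echo "$t $(( cap / users )) $lump"
        t=$(( t + 1 ))
    done
}

share_schedule 4 2 10   # 4 users, X = 2 s, so k = 3: 2 s on, 6 s off
```

Over any whole cycle of users * slot seconds both schemes deliver the same average; what differs is that under the lump scheme my traffic arrives as full-speed bursts separated by long silences, the same on/off shape as the netstat capture below.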

This may be desirable, however. Since the queue must be finite, it could easily fill up, after which any new arrivals would simply be dropped. Getting through would then be a game of chance with an extremely poor probability of success, and most users would simply get 0 bandwidth, complain to the service provider, and so on. As things are, they get 0 bandwidth, then lots of bandwidth, back and forth. Perhaps this better matches the expected bursty usage pattern; it may in fact be an artifact of the bandwidth allotment mechanism under extreme duress. There may also be legal and financial consequences to simply lowering everyone's outbound traffic to, say, 64Kbps. Still, I would prefer less bandwidth with an always-on feel to more bandwidth with an unpredictable, sometimes-on feel.
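The alternative described above, a finite queue that drops new arrivals once it is full (tail drop), can be sketched the same way. All parameters are hypothetical and `taildrop` is a made-up helper; the point is only that once the queue fills, `arrive - serve` packets are lost every second regardless of whose they are.

```sh
#!/bin/sh
# Toy tail-drop queue. Each second: `arrive` packets show up, only as many
# as fit under `limit` are queued (the rest are dropped), then up to
# `serve` packets are sent. Prints: second, packets still queued, dropped.
taildrop() {
    arrive=$1 serve=$2 limit=$3 seconds=$4
    q=0 t=0
    while [ "$t" -lt "$seconds" ]; do
        room=$(( limit - q ))
        if [ "$arrive" -gt "$room" ]; then admit=$room; else admit=$arrive; fi
        q=$(( q + admit ))
        dropped=$(( arrive - admit ))
        # Send up to `serve` packets from the queue.
        if [ "$q" -gt "$serve" ]; then q=$(( q - serve )); else q=0; fi
        echo "$t $q $dropped"
        t=$(( t + 1 ))
    done
}

taildrop 10 4 20 5
# prints: 0 6 0 / 1 12 0 / 2 16 2 / 3 16 6 / 4 16 6 (one per line)
```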

Below is output from the netstat utility, which reports the traffic on my network device. With the parameters shown, it accumulates and prints totals once per second, so each line below shows what my network device did during that second. During this experiment I was downloading a large file and thus sending acknowledgements of the received packets at ~10KB/s. Take a look:

```
# netstat 1 -b
    input (Total)               output
packets errs    bytes  packets errs   bytes colls
    195    0   276810      171    0   11410     0
    206    0   290862      169    0   11218     0
    158    0   223050      141    0    9531     0
    171    0   239482      151    0   10174     0
    206    0   290850      175    0   11614     0
    205    0   292368      163    0   10822     0
    134    0   189192      118    0    7852     0
      0    0        0        2    0     196     0
      0    0        0        2    0     196     0
      0    0        0        2    0     196     0
      0    0        0        2    0     196     0
      0    0        0        1    0      98     0
      0    0        0        2    0     176     0
      0    0        0        1    0      98     0
      0    0        0        1    0      98     0
      0    0        0        1    0      98     0
      0    0        0        1    0      98     0
      0    0        0        2    0     176     0
      0    0        0        1    0      98     0
     28    0    33556       21    0    1418     0
     80    0   110960       74    0    5017     0
    102    0   143460       87    0    5774     0
     26    0    37132       25    0    1682     0
      0    0        0        1    0      98     0
      0    0        0        1    0      98     0
      0    0        0        1    0      98     0
      0    0        0        1    0      98     0
      0    0        0        2    0     176     0
      0    0        0        1    0      98     0
      0    0        0        1    0      98     0
      0    0        0        1    0      98     0
      0    0        0        1    0      98     0
      0    0        0        1    0      98     0
      0    0        0        1    0      98     0
      0    0        0        1    0      98     0
      0    0        0        1    0      98     0
      0    0        0        1    0      98     0
      0    0        0        1    0      98     0
      0    0        0        1    0      98     0
      0    0        0        1    0      98     0
      0    0        0        1    0      98     0
      0    0        0        1    0      98     0
      0    0        0        1    0      98     0
      0    0        0        1    0      98     0
      0    0        0        1    0      98     0
      1    0      128        1    0      98     0
      1    0      128        1    0      98     0
      3    0      474        3    0     274     0
      2    0      256        2    0     196     0
      2    0      256        2    0     196     0
      2    0      256        2    0     196     0
      2    0      770        3    0     262     0
      3    0     1770        2    0     196     0
     90    0   124886       77    0    5146     0
    111    0   142888       98    0    8269     0
    107    0   150546       90    0    6004     0
    100    0   140444       90    0    6004     0
    104    0   146252       93    0    6202     0
     98    0   137664       91    0    6142     0
     93    0   124623       80    0    5361     0
    106    0   149280       96    0    6400     0
    167    0   233606      139    0    9238     0
    198    0   279718      173    0   11482     0
    206    0   293386      171    0   11350     0
    197    0   277980      169    0   11278     0
    199    0   282146      173    0   11674     0
    191    0   269435      169    0   11355     0
    192    0   265121      153    0   10179     0
    197    0   278610      174    0   11716     0
     25    0    34976       22    0    1516     0
      0    0        0        2    0     196     0
      0    0        0        2    0     196     0
      0    0        0        2    0     196     0
      0    0        0        5    0     432     0
      0    0        0        3    0     287     0
      0    0        0        3    0     287     0
      0    0        0        3    0     287     0
      0    0        0        2    0     196     0
      1    0      128        2    0     196     0
      4    0      594        3    0     262     0
      2    0      256        2    0     196     0
      2    0      256        2    0     196     0
      3    0      386        3    0     262     0
      3    0      402        3    0     262     0
    104    0   143286       86    0    5764     0
    170    0   242354      153    0   10366     0
    202    0   284806      172    0   11416     0
    204    0   290714      176    0   11697     0
    202    0   283656      167    0   11086     0
    200    0   282114      165    0   10971     0
    207    0   289612      167    0   11158     0
    193    0   273806      167    0   11158     0
      6    0     7202       14    0     988     0
      0    0        0        2    0     196     0
      0    0        0        2    0     196     0
      0    0        0        2    0     196     0
      0    0        0        2    0     196     0
      0    0        0        2    0     196     0
      0    0        0        2    0     196     0
      0    0        0        2    0     196     0
      0    0        0        2    0     196     0
      0    0        0        2    0     196     0
      0    0        0        2    0     196     0
      0    0        0        2    0     196     0
      1    0      128        2    0     196     0
      2    0      256        2    0     196     0
      2    0      256        2    0     196     0
     36    0    47594       27    0    1846     0
    103    0   143826       92    0    6136     0
     78    0   107198       69    0    4695     0
    105    0   147022       91    0    6070     0
     82    0   116066       74    0    4948     0
      0    0        0        2    0     196     0
^C
```
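To put rough numbers on X and k*X, the capture can be condensed into runs of busy and idle seconds. This is a sketch, assuming input bytes are in the third column as above and using an arbitrary 10000-byte threshold for a second to count as a burst; `netstat_runs` is a hypothetical helper, not a netstat feature.

```sh
#!/bin/sh
# Condense `netstat 1 -b` output into alternating runs of "burst" and
# "stall" seconds. A second is a burst when its input byte count (third
# column) exceeds the threshold given as $1 (default 10000, an arbitrary
# choice). Lines whose first field is not a number (headers, ^C, blank
# lines) are skipped.
netstat_runs() {
    awk -v min="${1:-10000}" '
        $1 !~ /^[0-9]+$/ { next }
        {
            state = ($3 + 0 > min + 0) ? "burst" : "stall"
            if (state == prev) { len++; next }
            if (prev != "") print prev, len   # close the previous run
            prev = state; len = 1
        }
        END { if (prev != "") print prev, len }
    '
}

# Example: netstat_runs < saved-capture.txt
```

Fed the capture above, this should report bursts of 4 to 17 seconds separated by stalls of 12 to 30 seconds, ignoring the runs cut off at the start and end of the capture.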

The behavior you see here also reflects how TCP is designed. When data or acknowledgements stop getting through, TCP assumes the network is congested and backs off, looking for a rate the path can sustain. After a short burst of activity the available bandwidth drops to 0, and TCP slows down. When bandwidth becomes available again, TCP is still waiting before its next attempt, so traffic stays low for a while. Most of this backing off happens on the sending (server) side: acknowledgements of data receipt are not being delivered to the server, so data that was already sent is resent, in smaller amounts and with longer and longer waits between retries, in hopes of letting the client catch up and resynchronize. Eventually, maybe 3-5 seconds after bandwidth is available, the download resumes. Sometimes it takes 60-90 seconds.
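The growing waits between retries come from TCP's retransmission-timeout backoff: after each unanswered retransmission, the timer doubles (RFC 6298). A minimal sketch, assuming a 1-second starting timeout and no upper cap; real stacks derive the starting value from measured round-trip times and cap the timer, so the numbers are illustrative only. `rto_schedule` is a hypothetical name.

```sh
#!/bin/sh
# Print when each retransmission fires, measured from the original send,
# if every attempt goes unanswered and the timeout doubles each time.
#   $1 = starting timeout in seconds, $2 = number of retries to show
rto_schedule() {
    rto=$1 retries=$2 t=0 n=1
    while [ "$n" -le "$retries" ]; do
        t=$(( t + rto ))
        echo "retry $n at ${t}s (after waiting ${rto}s)"
        rto=$(( rto * 2 ))
        n=$(( n + 1 ))
    done
}

rto_schedule 1 7
# last line: retry 7 at 127s (after waiting 64s)
```

If the link comes back shortly after the sixth retry (63 seconds in), the sender sits idle for up to another 64 seconds before trying again, which is one plausible explanation for the 60-90 second recoveries.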

So high-throughput downloads are practically intolerable at this time. I have found, however, that low-overhead, "burstable" communications (like SSH and HTTP) still work for the most part.

https://michal.guerquin.com/pinger.html, updated 2004-11-15 23:16 EST